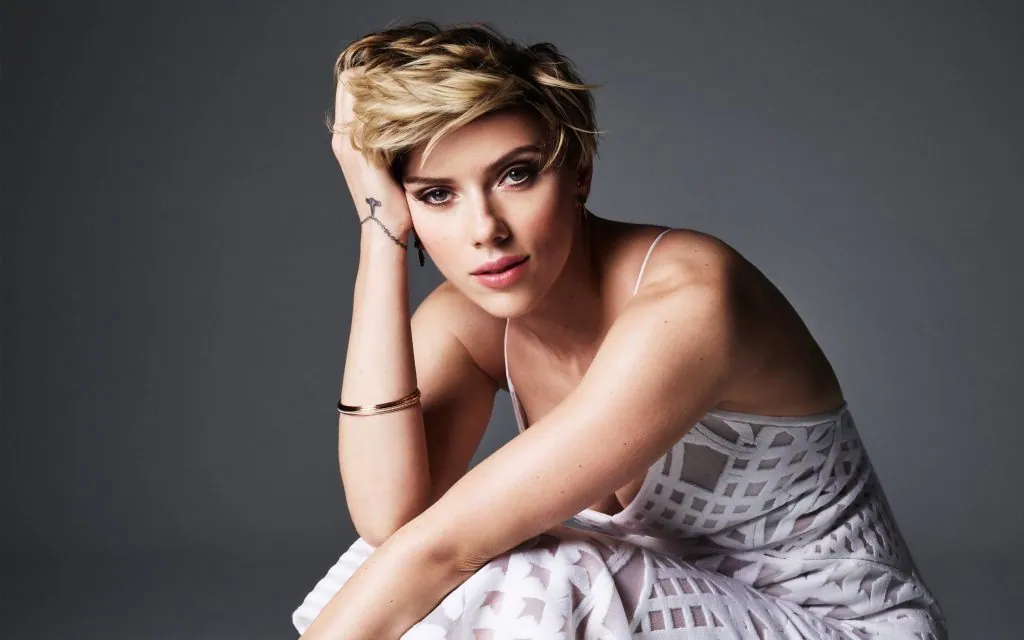

Actor Scarlett Johansson’s unusual dispute with OpenAI over “Sky,” a voice used in its GPT-4o chatbot, may soon be discussed in Congress: a House subcommittee has invited her to testify about it. On Monday, Rep. Nancy Mace, R-S.C., sent Johansson a letter requesting her presence at a July 9 hearing on deepfake technology hosted by the House Oversight Subcommittee on Cybersecurity, Information Technology, and Government Innovation. Mace wrote, “You recently expressed concerns via social media about the resemblance between your own voice and that of the ‘Sky’ chatbot, recently released as part of OpenAI’s GPT-4o update. This hearing would provide a platform for you to share those concerns with House Members and to inform the broader public debate concerning deepfakes.”

According to Axios, Johansson’s team informed Mace’s office that she cannot attend the July 9 hearing but may be available in October.

Last month, OpenAI unveiled the latest update to its chatbot, which featured a voice that sounded strikingly similar to Johansson’s. Days after “Sky” was released, Johansson issued a statement revealing that she had turned down an offer from OpenAI’s CEO, Sam Altman, last year to lend her voice to the chatbot. “Nine months later, my friends, family, and the general public all noted how much the newest system named ‘Sky’ sounded like me,” she wrote. “When I heard the released demo, I was shocked, angered, and in disbelief that Mr. Altman would pursue a voice that sounded so eerily similar to mine that my closest friends and news outlets could not tell the difference.”

Altman responded that Sky’s voice “was never intended to resemble” Johansson’s, and The Washington Post confirmed that the company had hired an actress to voice “Sky” months before Altman approached Johansson.

The controversy has raised further questions about AI’s impact on culture and its potential to reinforce biases. It has also fueled discussions about AI deepfakes, which are increasingly used to spread fake sexually explicit videos of female celebrities and teenage girls.